Advanced Skills - Statistics

When you have a continuous independent variable, it is normal to look for relationships between the independent variable and the dependent variable.

These relationships can be of a number of different types:

As the independent variable increases, the dependent variable also increases.

As the independent variable increases, the dependent variable decreases.

Other patterns are also possible, such as a curved relationship, or no relationship at all.

As with comparing means, there are two types of test. In general, it is preferable to look for relationships using the actual data values you have collected. Tests that do this are referred to as parametric tests, and they are generally more powerful (i.e. more likely to detect a relationship that really exists) than non-parametric tests, which rank your data into order before examining relationships. Essentially, ranking your data is an extreme data transformation. You should be familiar with data transformations, especially if your dependent variable is a direct count (e.g. number of individuals) or a percentage. However, in many cases you don't need to worry unduly about data transformations.
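To see why ranking is such an extreme transformation, here is a minimal Python sketch (the counts are invented for illustration). It replaces each value with its rank, giving tied values the average of the ranks they share, which is the usual convention in rank-based tests. Notice that the very large count of 250 simply becomes rank 5: how much bigger it was is thrown away.

```python
def rank(values):
    """Return the rank of each value (1 = smallest); ties share their average rank."""
    # Indices of the values, sorted from smallest to largest value
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover a run of tied values
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        # Sorted positions i..j correspond to ranks i+1..j+1; use their mean
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


counts = [3, 1, 250, 5, 5]   # hypothetical counts of individuals
print(rank(counts))          # [2.0, 1.0, 5.0, 3.5, 3.5]
```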

The premise of tests which look for relationships is as follows:

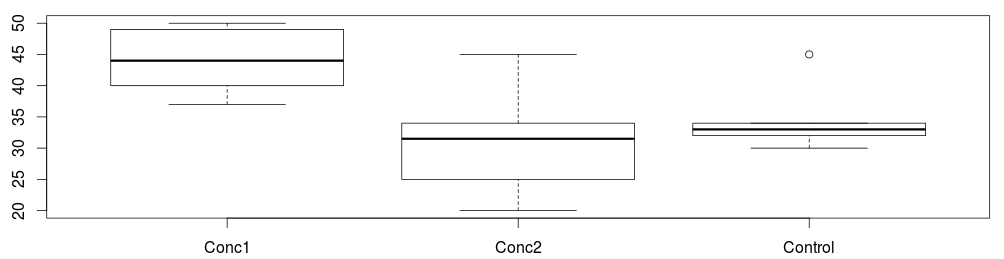

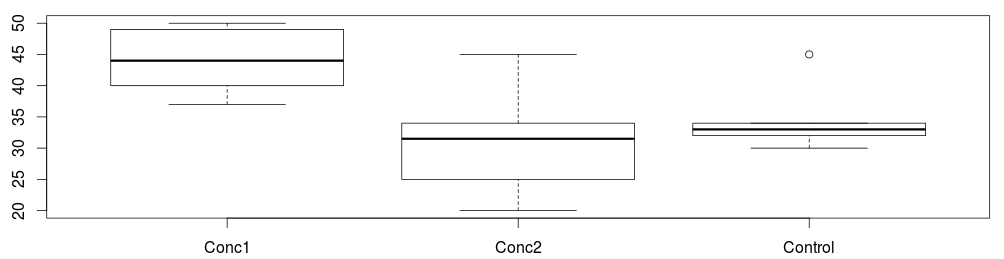

As you learned last year, if you plot data on a scatter plot, you can then draw the line of best fit through the data. A statistical test for a relationship essentially asks how much better the line of best fit describes the data than a horizontal line drawn through the mean of all the dependent variable values. If the line of best fit is a much better fit than would be expected by chance, the relationship is said to be significant.
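The comparison can be made concrete with a short Python sketch, assuming a straight line fitted by least squares and some invented data. It measures the scatter of the points around the horizontal mean line (`ss_total`) and around the line of best fit (`ss_resid`). r² is the proportion of the scatter removed by the line, and F compares that improvement with the scatter left over. Statistics software then converts F into a p value using the F distribution with 1 and n - 2 degrees of freedom, a step this sketch leaves out.

```python
def compare_fits(x, y):
    """Compare the line of best fit with a horizontal line through the mean of y."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Least-squares slope and intercept of the line of best fit
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Scatter of the points around the horizontal mean line
    ss_total = sum((yi - mean_y) ** 2 for yi in y)
    # Scatter of the points around the line of best fit
    ss_resid = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    # Proportion of the scatter explained by the line
    r2 = 1 - ss_resid / ss_total
    # Improvement in fit (1 df) relative to the leftover scatter (n - 2 df)
    f = (ss_total - ss_resid) / (ss_resid / (n - 2))
    return slope, intercept, r2, f


x = [1, 2, 3, 4, 5]   # hypothetical independent variable
y = [2, 4, 5, 4, 5]   # hypothetical dependent variable
slope, intercept, r2, f = compare_fits(x, y)
print(f"y = {intercept:.2f} + {slope:.2f}x, r2 = {r2:.2f}, F = {f:.2f}")
# y = 2.20 + 0.60x, r2 = 0.60, F = 4.50
```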

When we compared means, the test gave no information on 'how different' the means were: the difference could be small but still significant. With relationship statistics, however, you not only get a p value, telling you whether the relationship is significant, but also an r or r² value, telling you how strong the relationship is.

If you get an r value (from a correlation), it will range between -1 (a perfect negative correlation: as one variable increases, the other decreases) and 1 (a perfect positive correlation: as one variable increases, the other also increases). A value of zero means there is no linear (straight-line) relationship.
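As a rough illustration, Pearson's r can be calculated directly from its definition in a few lines of Python (the data are invented). The third example is a U-shaped pattern: the two variables are clearly related, but r is zero because there is no straight-line trend.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squares about the means
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


x = [1, 2, 3, 4, 5]
print(pearson_r(x, [2, 4, 6, 8, 10]))   # 1.0  (perfect positive)
print(pearson_r(x, [10, 8, 6, 4, 2]))   # -1.0 (perfect negative)
print(pearson_r(x, [3, 1, 2, 1, 3]))    # 0.0  (U-shaped, no linear trend)
```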

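If you get an r² value (from a regression), it will range between 0 and 1: 0 indicates no relationship, and 1 a perfect relationship (all points lie on the line). You can convert r to r² by squaring it. The result is always between 0 and 1, and unless r is 0, 1 or -1, r² is closer to zero than r. Converting back by taking the square root gives the size of r but not its sign; the sign comes from the direction of the slope.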
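A worked example of the conversion, using an invented correlation of r = -0.8:

```latex
\begin{aligned}
r &= -0.8 \quad\Rightarrow\quad r^2 = (-0.8)^2 = 0.64 \\
\sqrt{r^2} &= \sqrt{0.64} = 0.8 \quad \text{(the minus sign is not recovered)}
\end{aligned}
```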

For data collected in field conditions, an r² of 0.4 or more is considered a relatively strong relationship. For data collected in the lab, values would normally be higher, and an r² of more than 0.7 may be considered a strong relationship.

It is possible to have a significant regression with a very low r². This would be classed as a significant but weak relationship, and it is most likely when the sample size is large. It is also possible (although less likely) to have a high r² but a non-significant regression or correlation, usually when there are only a few data points. This would simply be described as a non-significant relationship.
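Why sample size matters can be sketched with the standard t statistic for a correlation, t = r√(n - 2) / √(1 - r²), which has n - 2 degrees of freedom. The Python sketch below uses invented values of r and n and, rather than calculating exact p values, compares t with two-tailed critical values at p = 0.05 copied from a t table. For a regression with a single independent variable, this test gives the same p value as the regression itself.

```python
import math


def t_for_r(r, n):
    """t statistic for testing whether a correlation differs from zero (df = n - 2)."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


# Two-tailed critical t values at p = 0.05, from standard t tables
T_CRIT = {2: 4.303, 198: 1.972}

# Weak but significant: r = 0.2 (r2 = 0.04) with 200 data points
t_weak = t_for_r(0.2, 200)
print(round(t_weak, 2), t_weak > T_CRIT[198])    # 2.87 True

# Strong but non-significant: r = 0.9 (r2 = 0.81) with only 4 data points
t_strong = t_for_r(0.9, 4)
print(round(t_strong, 2), t_strong > T_CRIT[2])  # 2.92 False
```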

These examples show that it is important to consider the p value first and the r² value second: if the relationship is not significant, ignore the r² value.
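The p-first rule, together with the rough r² thresholds for field and lab data given above, can be written as a small decision function. This is a hypothetical helper for illustration only (`describe_relationship` is not part of SPSS or R), and the thresholds are rules of thumb rather than fixed cut-offs.

```python
def describe_relationship(p, r2, setting="field", alpha=0.05):
    """Describe a result by checking p first and r2 second (hypothetical helper)."""
    if p >= alpha:
        # Not significant: the r2 value is ignored
        return "non-significant relationship"
    if setting == "field":
        strong = r2 >= 0.4      # rule of thumb for field data
    elif setting == "lab":
        strong = r2 > 0.7       # rule of thumb for lab data
    else:
        raise ValueError("setting must be 'field' or 'lab'")
    return f"significant, {'strong' if strong else 'weak'} relationship"


print(describe_relationship(p=0.001, r2=0.15))               # significant, weak relationship
print(describe_relationship(p=0.20, r2=0.85))                # non-significant relationship
print(describe_relationship(p=0.01, r2=0.75, setting="lab")) # significant, strong relationship
```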

Technically, a 'regression' implies cause and effect: the independent variable is thought to cause the change in the dependent variable. A regression does not actually test for cause and effect, but it is an assumption of the analysis. For example, CO2 levels may alter global temperature, so it would be appropriate to use a regression to test whether there is a relationship between CO2 (the independent variable) and temperature (the dependent variable).

Correlations are used when there is no logical reason to expect cause and effect between two variables. For example, shoe size and height are likely correlated, but one does not cause the other. Both are related to the same genetic and environmental factors.
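One way to see that the direction of a regression matters, while a correlation has no direction, is to swap the two variables. In this Python sketch with invented data, regressing y on x and regressing x on y give two different lines, whereas r is the same either way.

```python
import math


def slope(x, y):
    """Least-squares slope when y is treated as the dependent variable."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def pearson_r(x, y):
    """Pearson correlation coefficient; symmetric in x and y."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


x = [1, 2, 3, 4, 5]   # hypothetical data
y = [2, 4, 5, 4, 5]

print(round(slope(x, y), 3))      # 0.6   (y regressed on x)
print(round(slope(y, x), 3))      # 1.0   (x regressed on y)
print(round(pearson_r(x, y), 3))  # 0.775
print(round(pearson_r(y, x), 3))  # 0.775 (the same either way)
```

If the two regressions described the same line, the second slope would be 1/0.6 ≈ 1.67 rather than 1.0.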

It is normally best to begin with a regression, as it is much easier to test the assumptions of regression by drawing a graph of the 'residuals' (the vertical distances between each data point and the line of best fit). If these assumptions are broken, a Spearman Rank Correlation, which is a non-parametric test, can be performed instead.
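A sketch of both steps in Python, using invented data that curve upwards. The residuals from a straight-line fit follow a clear pattern (positive at both ends, negative in the middle), which suggests the straight-line assumption is broken. Spearman's rank correlation, calculated here as Pearson's r on the ranks, still detects the perfectly consistent increase. In practice you would plot the residuals against the fitted values rather than print them.

```python
import math


def fit_line(x, y):
    """Least-squares intercept and slope of the line of best fit."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return my - slope * mx, slope


def residuals(x, y):
    """Observed y minus the y predicted by the line of best fit."""
    intercept, slope = fit_line(x, y)
    return [b - (intercept + slope * a) for a, b in zip(x, y)]


def rank(values):
    """Ranks starting at 1; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson_r(x, y):
    """Pearson correlation coefficient."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson's r calculated on the ranks."""
    return pearson_r(rank(x), rank(y))


x = [1, 2, 3, 4, 5, 6]        # hypothetical data
y = [1, 2, 4, 8, 16, 32]      # always increasing, but along a curve

print([round(e, 2) for e in residuals(x, y)])  # positive at both ends, negative in the middle
print(spearman_rho(x, y))                      # 1.0
```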

A video of how to determine if data assumptions are met for parametric tests is given here.

The data file used in the examples of all of these tests is available here for analysis in SPSS, and as a .csv file here for analysis in R.

Videos showing how to perform the analysis in SPSS are here. These include how to test the assumptions of a parametric test.

Videos showing how to perform the analysis in R are here. These include how to test the assumptions of a parametric test. You can download the R script file here.


The output from regression and correlation in SPSS can be very confusing. Make sure you know which tables you are supposed to look at by following the guidelines in the videos and on the respective webpages.

Other tests